New BARC Study: Observability is the Foundation for Trustworthy AI

BARC has published its latest study, Observability for AI Innovation – Adoption Trends, Requirements and Best Practices. Drawing on a survey of hundreds of data and AI professionals worldwide, the report shows how observability is evolving into a core discipline that supports privacy, transparency and reliability in AI and machine learning.

“Observability is no longer a technical afterthought. It is becoming a strategic requirement for trustworthy AI,” said Kevin Petrie, VP of Research at BARC US and co-author of the study. “Mature AI initiatives are rooted in strong governance, and observability is the operational foundation to get there.”

AI maturity depends on observability and governance

More than two-thirds of surveyed organizations have formalized observability for data, pipelines and models. Their top priorities include data privacy, auditability and model accuracy. The biggest challenges are non-technical: skills gaps, manual processes and siloed teams. Only 59% of respondents fully trust their model outputs, which highlights the need for stronger observability programs.

Beyond tables: observability expands to new data types

Structured data remains the primary focus, but nearly a third of respondents now also observe unstructured and semi-structured data such as documents, images, videos and logs. This capability is essential for generative AI, where teams need to monitor how content is processed, vectorized and managed with metadata.

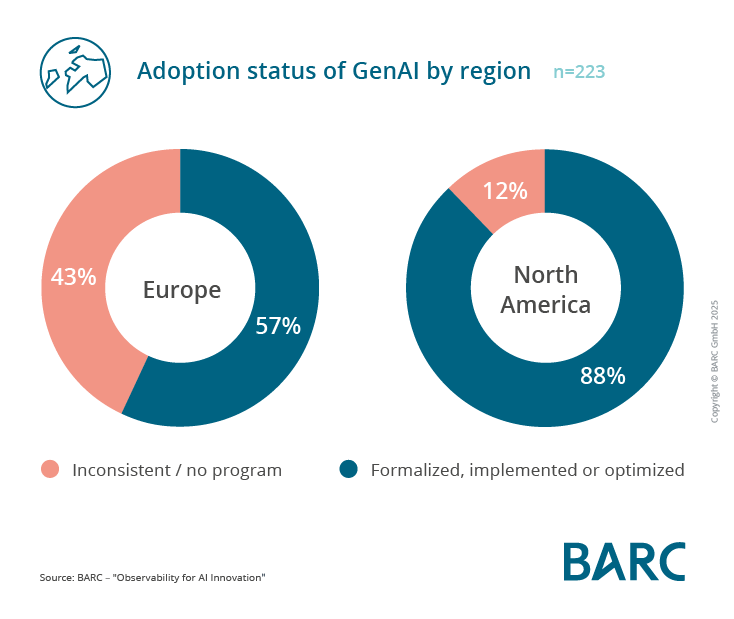

North America leads in observability maturity

Organizations in North America are significantly ahead. On average, 88% of North American organizations have formal observability programs in place, compared with 57% in Europe. North American organizations also monitor a broader range of data types and use more structured measurement practices, while many European firms still rely on ad hoc approaches. European organizations must close this gap to support more scalable, production-ready AI.

GenAI adoption pushes observability forward

Organizations adopting GenAI are also advancing their observability capabilities. Among GenAI users and testers, monitoring of unstructured data, vector databases and metadata is already common. GenAI introduces new requirements but also reinforces the need for high-quality tabular data. Cross-team collaboration and connected data landscapes are essential to keep AI models transparent and accountable.