Data Quality and Master Data Management: How to Improve Your Data Quality

The importance of data quality and master data management is very clear: people can only make the right data-driven decisions if the data they use is correct. Without sufficient data quality, data is practically useless and sometimes even dangerous.

But despite its importance, the reality in many of today’s organizations looks quite gloomy: data quality has been voted among the top three problems for BI software users every year since the first issue of The BI Survey back in 2002.

What is Data Quality and Master Data Management?

Data quality can be defined in many different ways. In the most general sense, good data quality exists when data is suitable for the use case at hand.

This means that quality always depends on the context in which the data is used, so there is no absolutely valid quality benchmark.

Nonetheless, several definitions use the following criteria for evaluating data quality:

- Completeness: are values missing?

- Validity: does the data match the rules?

- Uniqueness: is there duplicated data?

- Consistency: is the data consistent across various data stores?

- Timeliness: does the data represent reality as of the required point in time?

- Accuracy: to what degree does the data represent reality?
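
Some of these criteria can be turned into simple, measurable scores. The following is a minimal sketch in Python, not a definitive implementation: the customer records, the field names and the e-mail rule are assumptions made up for illustration, and each score is just one plausible way to quantify completeness, validity and uniqueness.

```python
import re

# Hypothetical customer records; field names and values are illustrative only.
records = [
    {"id": 1, "email": "ann@example.com", "country": "DE"},
    {"id": 2, "email": "", "country": "FR"},
    {"id": 3, "email": "not-an-email", "country": "DE"},
    {"id": 3, "email": "bob@example.com", "country": "US"},
]

# Assumed validity rule: a very rough e-mail pattern, not a full validator.
EMAIL_RULE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def completeness(rows, field):
    """Share of rows in which the field is present and non-empty."""
    filled = sum(1 for r in rows if r.get(field) not in (None, ""))
    return filled / len(rows)


def validity(rows, field, rule):
    """Share of non-empty values that match the given rule."""
    values = [r[field] for r in rows if r.get(field) not in (None, "")]
    if not values:
        return 1.0  # nothing to validate
    return sum(1 for v in values if rule.match(v)) / len(values)


def uniqueness(rows, field):
    """Share of distinct values among all values of the field."""
    values = [r.get(field) for r in rows]
    return len(set(values)) / len(values)


scores = {
    "completeness(email)": completeness(records, "email"),
    "validity(email)": validity(records, "email", EMAIL_RULE),
    "uniqueness(id)": uniqueness(records, "id"),
}

for name, score in scores.items():
    print(f"{name}: {score:.2f}")
```

Consistency, timeliness and accuracy usually need a second data store, a timestamp or an external reference to compare against, which is why they are left out of this sketch.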

In the context of business intelligence, the goal of master data management is to bring together and exchange master data such as customer, supplier or product master data from disparate applications or data silos. Master data management is needed …

- … because aside from a “master” ERP system, many companies work with other CRM or SCM systems or Web services. Master data management assures data consistency across these systems;

- … to easily integrate systems following corporate mergers and acquisitions;

- … to cooperate effectively with business partners;

- … to provide an optimal customer experience;

- … to build a 360-degree customer view that addresses customer needs in the best way possible;

- … to merge on-premises and cloud-based systems.

Why Data Quality and Master Data Management Are Important

Valid data lies at the heart of the strategic, tactical and operational steering of every organization. Having appropriate data quality processes in place directly correlates with an organization’s ability to make the right decisions and assure its economic success.

As The BI Survey data shows, companies have always struggled to ensure a high level of data quality. But in today’s digital age, in which data is increasingly emerging as a factor of production, there is growing pressure to use or produce high quality data.

Here are just a few of the growing challenges for data quality in today’s companies:

- Employees do not work with their BI applications because they do not trust them (and/or their underlying data)

- Incorrect data leads to false facts and bad decisions in data-driven environments

- If data quality guidelines are not defined, multiple data copies are generated that can be expensive to clean up

- A lack of uniform concepts for data quality leads to inconsistencies and threatens content standards

- For data silo reduction, uniform data is necessary to allow systems to talk to each other

- To make Big Data useful, data needs business context; the link to the business context is master data (e.g., in Internet of Things use cases, reliable product master data is absolutely necessary)

- Shifting the focus to data (away from applications) requires a view of data that is independent of the usage context, so there are both general and specific requirements for the quality of data

Furthermore, there are specific characteristics in today’s business world that push the organization to think about how to collect reliable data:

- Companies must be able to react as flexibly as possible to dynamically changing market requirements. Otherwise, they risk missing out on valuable business opportunities. Therefore, a flexible data landscape that can react quickly to changes and new requirements is essential. Effective master data management can be the crucial factor when it comes to minimizing integration costs.

- Business users are demanding more and more cross-departmental analysis from integrated datasets. Data-driven enterprises in particular depend on quality-assured master data as a prerequisite for optimizing their business processes and developing new (data-driven) services and products.

- Rapidly growing data volumes, as well as internal and external data sources, lead to a constantly increasing data basis. Transparent definitions of data and its relationships are essential for managing and using them.

- Stricter compliance requirements make it more important than ever to ensure that data quality standards are met.

To make matters worse, the analytical landscapes of organizations are becoming more complex. Companies collect increasing volumes of differently structured data from various sources while at the same time implementing new analytical solutions.

This drastically increases the importance of consistent master data. Companies can only unlock the full economic potential of their data if the master data is well managed and provided in high quality.

How Important Is Data Quality and Master Data Management to Users?

Almost all respondents to the 2017 and 2016 BARC BI Trend Monitor surveys see data quality and master data management as the second or third most important trend. At a time when front end-related topics like self-service BI and data discovery get most of the attention, BI professionals seem well aware that these new technologies are only valuable if the underlying data quality is well looked after.

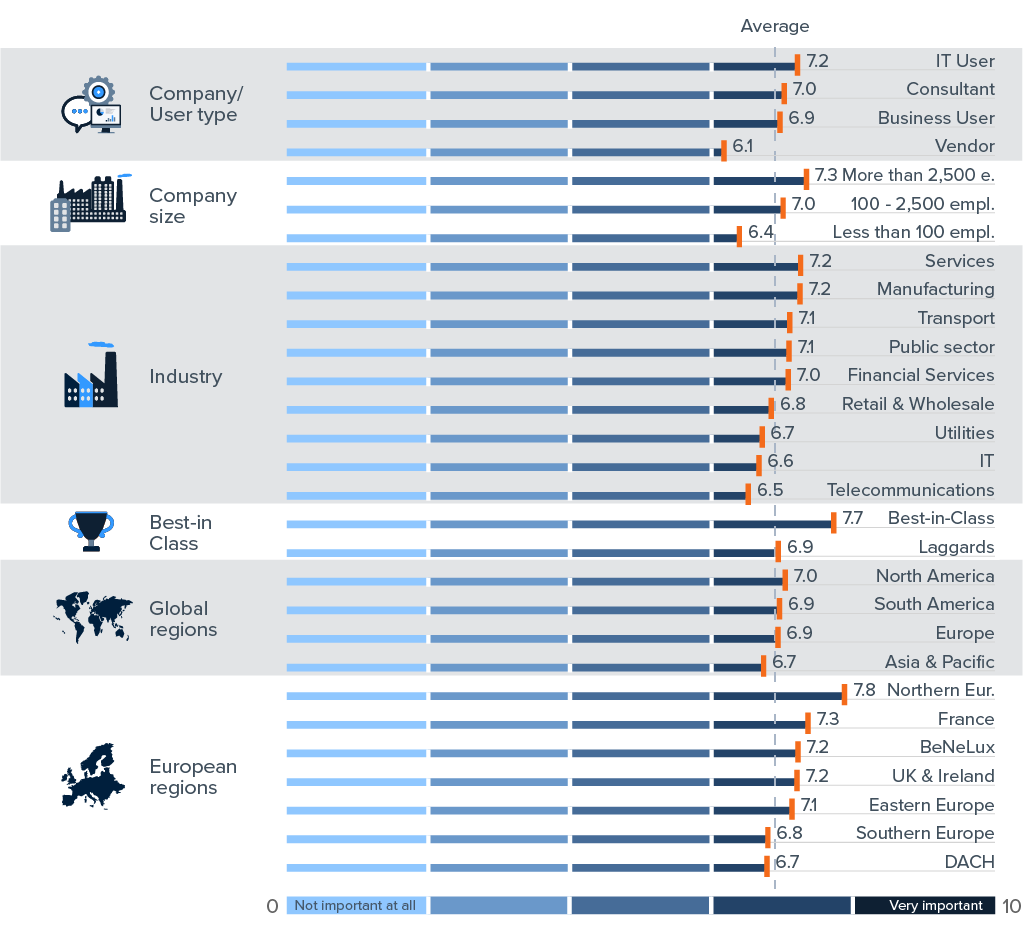

Importance of Data Quality and Master Data Management in 2017 (n=2,653)

Best-in-class, Northern Europe, and IT users rate master data & data quality management as very important. Vendors and small companies see it as less relevant.

How to Achieve a High Level of (Master) Data Quality

Successful data quality and master data management initiatives require a holistic approach.

Organizations need to address people, processes and technology to implement the business demands on data quality:

- The organization should include clear responsibilities for data domains (e.g., customer, product, financial figures), as well as roles (data owner, operational data quality assurance / data stewards).

- Processes for data quality assurance can be defined by adopting best practices like the data quality cycle.

- Technology supports people in their processes via software features and the requisite IT architecture.

It is important to consider business obligations first and keep in mind that the organization and its processes are always more important than the technology since they are defined according to company strategy. So technology is simply there to support them.

Organization of Data Quality and Master Data Management

When it comes to improving data quality, a company culture that recognizes data as a key production factor for generating insights is essential.

In the context of data quality and master data management, the responsibility for data plays a crucial role.

Role concepts help with the definition and assignment of tasks and competencies to certain employees. By assigning certain roles, the company can ensure that responsibility for accurate data and its care is clear and enduring.

Typical roles for ensuring data quality and master data management are:

- The data owner is the central contact person for certain data domains. The data owner defines requirements, ensures data quality and accessibility, assigns access rights and authorizes data stewards to manage data.

- The data steward defines rules, plans requirements and coordinates data delivery. The data steward is also responsible for operational data quality, for example checking for duplicate values.

- The data manager is usually a member of the IT department. The data manager implements the requirements of the data owner, manages the technological infrastructure and secures access protection.

- All being well, data users from business departments or IT have access to reliable and understandable data.

Each role involves clear tasks that are geared towards company-specific goals.

Processes for Data Quality and Master Data Management

The best practice process for improving and ensuring high data quality follows the so-called data quality cycle.

The cycle is made up of an iterative process of analyzing, cleansing and monitoring data quality. The concept of a cycle emphasizes that data quality is not a one-time project but an ongoing undertaking.

The data quality cycle is made up of the following phases:

- First, data quality goals or metrics need to be defined according to business needs. These goals should also form part of the overall data quality strategy. There should be a clear understanding of what data should be analyzed. Does a lack of completeness for some data really matter? What attributes are required for data to be complete? How can a data domain (e.g., a customer) be defined?

- Then the data is analyzed: questions like “which values can the data have?” or “is the data valid and accurate?” need to be addressed.

- The cleansing of the (master) data is normally done according to individually engineered business rules.

- Enrichment of data (with, for example, geo-data or socio-demographic information) can help with systems and business processes.

- To ensure (master) data quality is reached, continuous monitoring and checking of the data is very important. This can be done automatically via software by applying defined business rules. So at the end of the cycle, there is a smooth transition from the original data quality initiative to the second phase: the ongoing protection of data quality.
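
The monitoring phase, in which defined business rules are applied automatically, can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions rather than how any particular tool works: the rule names, the product records and the 0.9 threshold are all hypothetical.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]
Rule = Callable[[Record], bool]

# Hypothetical business rules for a product master data domain.
RULES: Dict[str, Rule] = {
    "name_present": lambda r: bool(r.get("name")),
    "price_positive": lambda r: isinstance(r.get("price"), (int, float))
    and r["price"] > 0,
}


def monitor(rows: List[Record], rules: Dict[str, Rule]) -> Dict[str, float]:
    """Return, for each rule, the share of rows that pass it."""
    return {
        name: sum(1 for r in rows if rule(r)) / len(rows)
        for name, rule in rules.items()
    }


def failing_rules(pass_rates: Dict[str, float], threshold: float) -> List[str]:
    """Names of rules whose pass rate is below the agreed threshold."""
    return sorted(name for name, rate in pass_rates.items() if rate < threshold)


# Illustrative product records; in practice these would come from a data store.
products = [
    {"name": "Widget", "price": 9.5},
    {"name": "", "price": 4.0},
    {"name": "Gadget", "price": -1},
    {"name": "Bolt", "price": 0.2},
]

pass_rates = monitor(products, RULES)
alerts = failing_rules(pass_rates, threshold=0.9)  # assumed quality goal

print(pass_rates)
print("Rules below threshold:", alerts)
```

Running such a check on a schedule and tracking the pass rates over time is one simple way to make the transition from a one-time initiative to ongoing protection of data quality.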

The different phases are typically assigned to the aforementioned roles.

Data Quality and Master Data Management Software

Most of the technologies on the market today are aligned with the data quality cycle and provide in-depth functionality to assist various user roles in their processes.

The best way of achieving high data quality with technology is to integrate the different phases of the data quality cycle into operational processes and match them with individual roles. Software tools assist in different ways by providing:

- Data profiling functions

- Data quality functions like cleansing, standardization, parsing, de-duplication, matching, hierarchy management, identity resolution

- User-specific interfaces/workflow support

- Integration and synchronization with application models

- Data cleansing, enrichment and removal

- Data distribution and synchronization with data stores

- Definition of metrics, monitoring components

- Data Lifecycle Management

- Reporting components, dashboarding

- Versioning functionality for datasets, issue tracking, collaboration
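
To make functions such as standardization, matching and de-duplication more concrete, here is a minimal Python sketch based on the standard library's `difflib`. It is an assumption-laden illustration, not the approach of any specific product: the supplier names, the `standardize` helper and the 0.85 similarity threshold are invented for this example, and commercial tools typically use far more sophisticated matching.

```python
from difflib import SequenceMatcher
from typing import List, Tuple


def standardize(name: str) -> str:
    """Lowercase, drop simple punctuation and collapse whitespace."""
    cleaned = name.lower().replace(".", "").replace(",", "")
    return " ".join(cleaned.split())


def similarity(a: str, b: str) -> float:
    """Similarity of two names after standardization (0.0 to 1.0)."""
    return SequenceMatcher(None, standardize(a), standardize(b)).ratio()


def find_duplicates(names: List[str], threshold: float = 0.85) -> List[Tuple[str, str]]:
    """Return pairs of names that are likely to refer to the same entity."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if similarity(names[i], names[j]) >= threshold:
                pairs.append((names[i], names[j]))
    return pairs


# Hypothetical supplier master data with one near-duplicate entry.
suppliers = ["ACME Corp.", "Acme  Corp", "Globex GmbH", "Initech Ltd"]
duplicates = find_duplicates(suppliers)

print(duplicates)
```

The pairwise comparison is quadratic in the number of records, so it only suits small datasets; it is meant to show the idea of matching after standardization, not a scalable design.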

This list of functions is intended to give an overview of the functional range offered by current data quality and master data management tools.

Based on its individual requirements, every organization should define and prioritize which specific functions are relevant to it and which will have a significant impact on the business.

The market for data quality and master data management tools is comparatively heterogeneous. Providers can be classified according to their focus or their history in the following groups:

- Business intelligence and data management generalists have a broad software portfolio which can also be used for data quality and master data management tasks.

- Specialists in service-oriented infrastructures also offer software platforms, which can be used to manage master data.

- Data quality specialists are mainly focused on how data quality can be ensured and provide tools that can be integrated into existing infrastructures and systems.

- Data integration specialists offer tools that are especially useful for matching and integrating data from different systems.

- For master data management specialists, master data management is a strategic topic. These providers offer explicit solutions and services for the management of master data.

- Data Preparation (Data Pre-Processing for Data Discovery) is a relatively new trend in the business intelligence and data management market. In the context of data quality, data discovery software can be a flexible (but not really durable) tool for business users to address data quality problems.

A Simple Piece of Advice: Just Start!

Many companies are still afraid of data optimization projects such as data quality and master data management initiatives. The organizational, procedural and technological adjustments that have to be made can seem too complex and incalculable.

On the other hand, companies that have successfully run data quality and master data management initiatives achieved their success because they simply took the decision to launch their initiative and then evolved step by step.

Companies suffering data quality problems should start by defining a short set of guidelines in which they acknowledge that the age of digitization requires rethinking and that data must be viewed as an important production factor.

In line with the business or company strategy, corresponding initiatives should be launched (e.g., create centrally available master data; document data domains, dimensions and KPIs; define contact persons and data management processes).

With the success of these initiatives (higher data quality), the company will notice improvements in its internal business processes (e.g., management and sales departments will make decisions based on correct data instead of “gut feeling”), as well as new potential, such as the chance to become a digitalized company that is able to develop new data-centric products and services for business partners.

Only data-driven companies can compete in the era of digitization. In the increasingly complex world of data, enterprises need reliable pillars. Reliable data is a critical factor. Ultimately, sustainable data quality management will pay off.